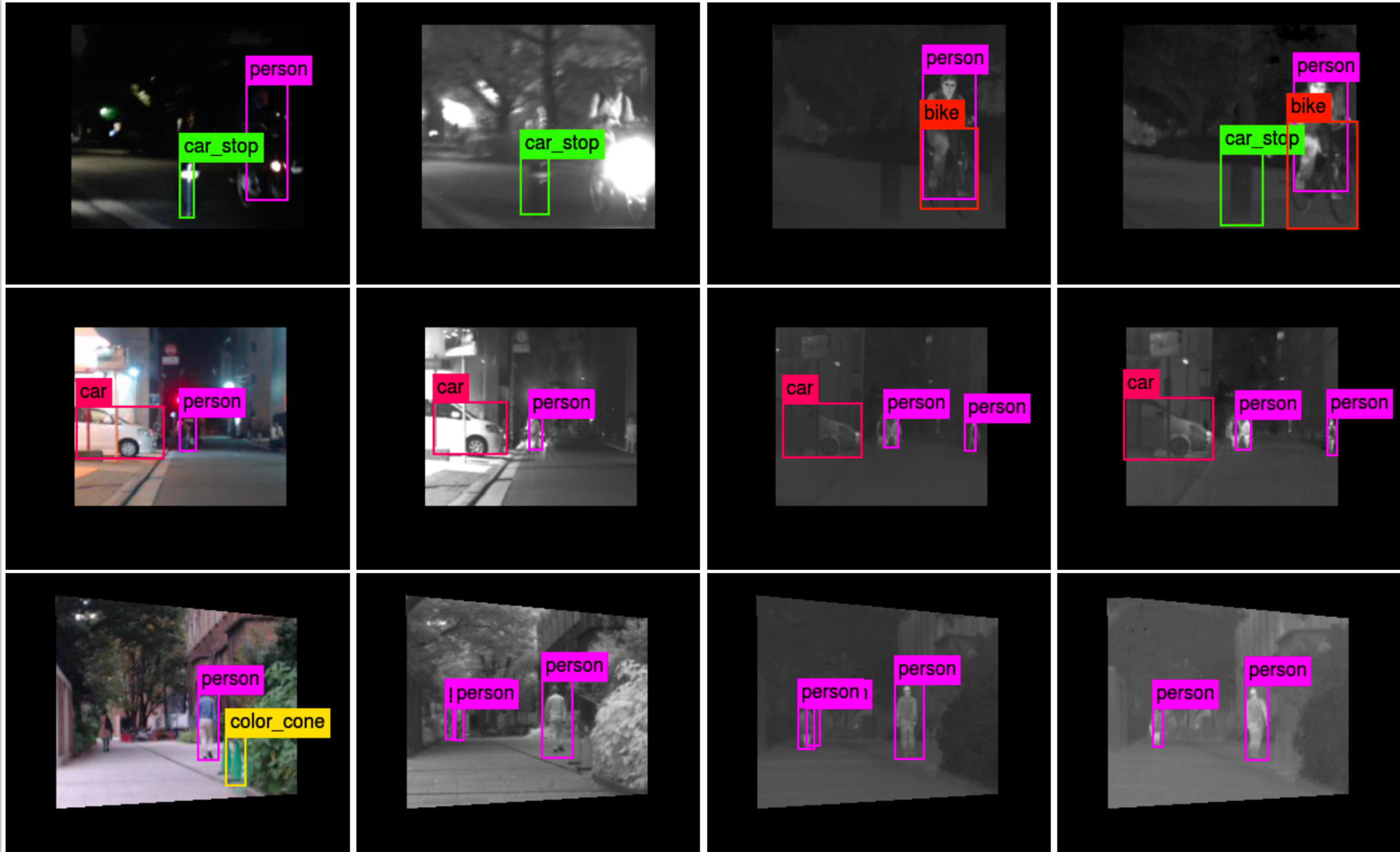

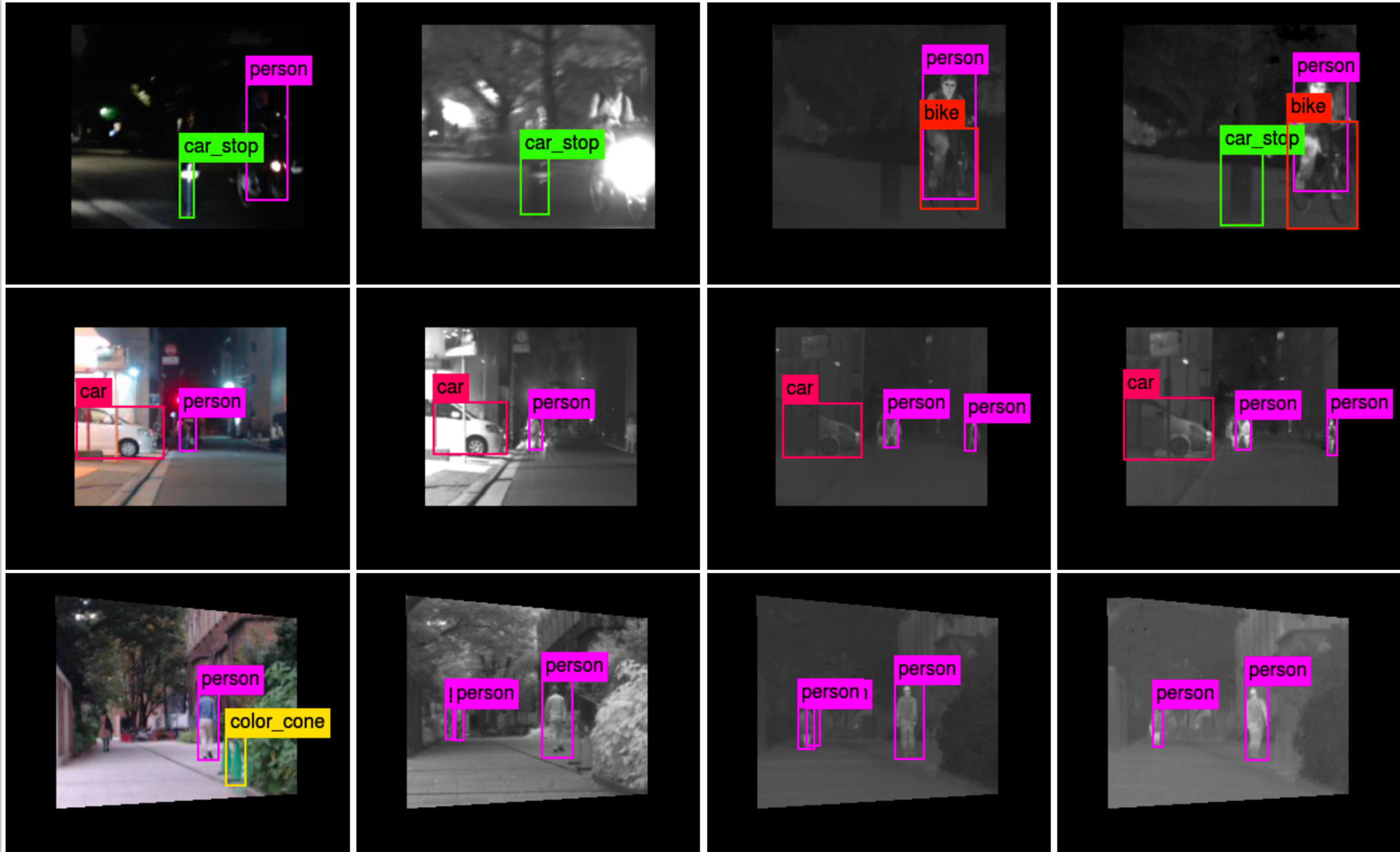

Recently, researchers have actively conducted studies on mobile robot technologies that involve autonomous driving. To implement an automatic mobile robot (e.g., an automated driving vehicle) in traffic, robustly detecting various types of objects such as cars, people, and bicycles in various conditions such as daytime and nighttime is necessary. In this paper, we propose the use of multispectral images as input information for object detection in traffic. Multispectral images are composed of RGB images, near-infrared images, middle-infrared images, and far-infrared images and have multilateral information as a whole. For example, some objects that cannot be visually recognized in the RGB image can be detected in the far-infrared image. To train our multispectral object detection system, we need a multispectral dataset for object detection in traffic. Since such a dataset does not currently exist, in this study we generated our own multispectral dataset. In addition, we propose a multispectral ensemble detection pipeline to fully use the features of multispectral images. The pipeline is divided into two parts: the single-spectral detection model and the ensemble part. We conducted two experiments in this work. In the first experiment, we evaluate our single-spectral object detection model. Our results show that each component in the multispectral image was individually useful for the task of object detection when applied to different types of objects. In the second experiment, we evaluate the entire multispectral object detection system and show that the mean average precision (mAP) of multispectral object detection is 13% higher than that of RGB-only object detection.

Takumi Karasawa, Kohei Watanabe, Qishen Ha, Antonio Tejero-De-Pablos, Ushiku Yoshitaka, and Tatsuya Harada, "Multispectral Object Detection for Autonomous Vehicles," The 25th Annual ACM International Conference on Multimedia (ACMMM 2017), Thematic Workshops, 2017.

Contact: karasawa (at) mi.t.u-tokyo.ac.jp, watanabe (at) mi.t.u-tokyo.ac.jp, harada (at) mi.t.u-tokyo.ac.jp

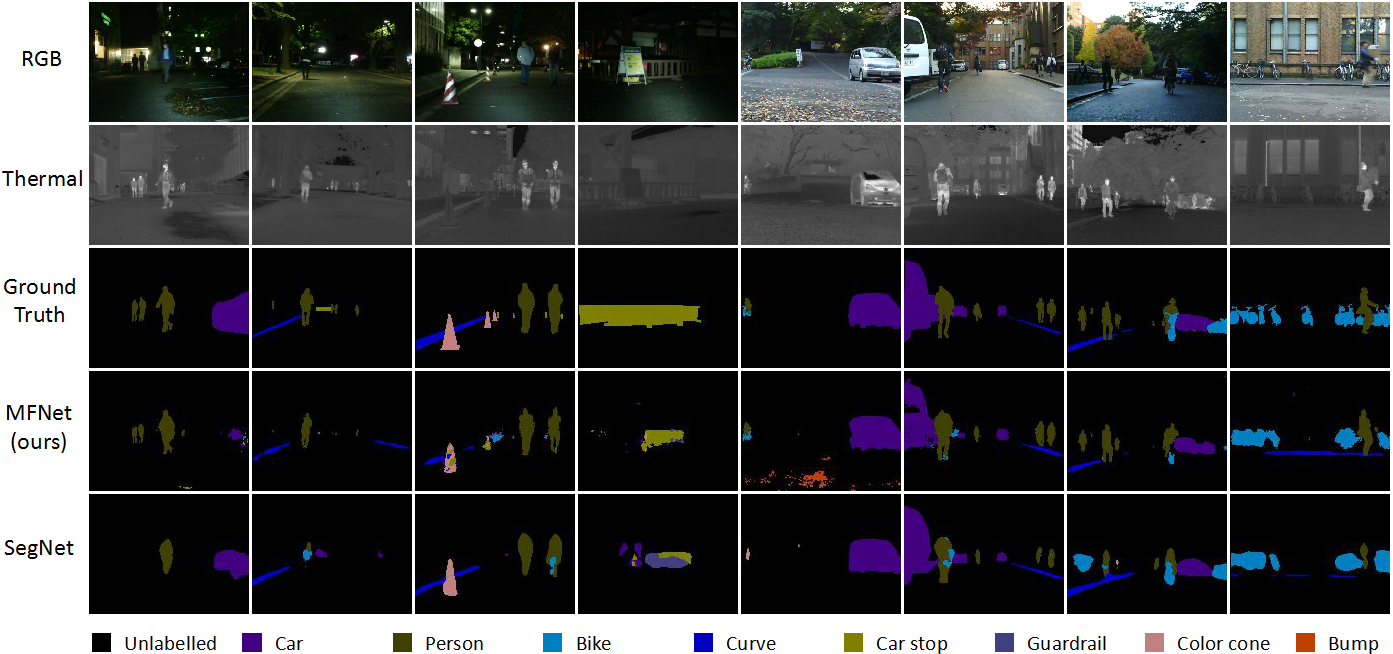

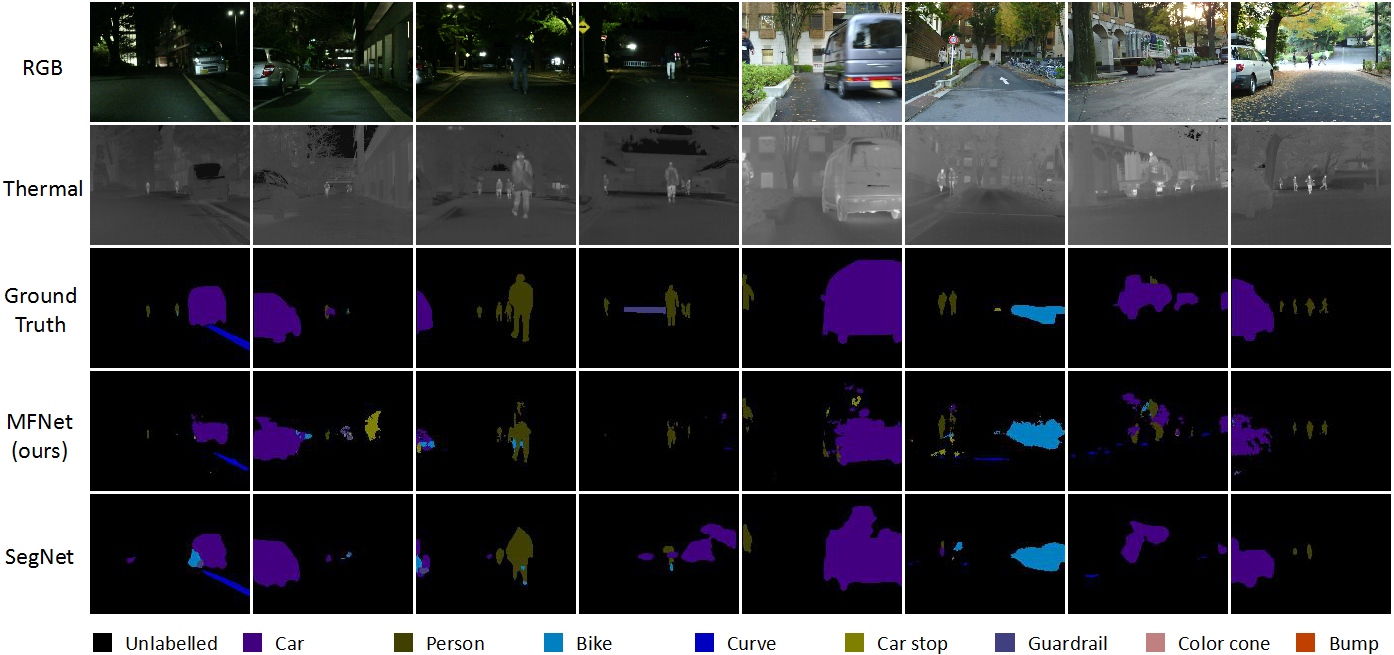

This work addresses the semantic segmentation of images of street scenes for autonomous vehicles based on a new RGB-Thermal dataset, which is also introduced in this paper. An increasing interest in self-driving vehicles has necessitated the adaptation of semantic segmentation for self-driving systems. However, recent research relating to semantic segmentation is mainly based on RGB images, whose quality degrades during times of poor visibility at night and under adverse weather conditions. Furthermore, most of these methods focus only on improving performance while ignoring time consumption. These problems prompted us to propose a new convolutional neural network architecture for multi-spectral image segmentation that enables the segmentation accuracy to be retained during real-time operation. We benchmarked our method by creating an RGB-Thermal dataset in which thermal and RGB images are combined. We showed that the segmentation accuracy was significantly increased by adding thermal infrared information.

Qishen Ha, Kohei Watanabe, Takumi Karasawa, Ushiku Yoshitaka, and Tatsuya Harada, "MFNet: Towards Real-Time Semantic Segmentation for Autonomous Vehicles with Multi-Spectral Scenes," The 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017), 2017.

Contact: haqishen (at) mi.t.u-tokyo.ac.jp, watanabe (at) mi.t.u-tokyo.ac.jp, harada (at) mi.t.u-tokyo.ac.jp